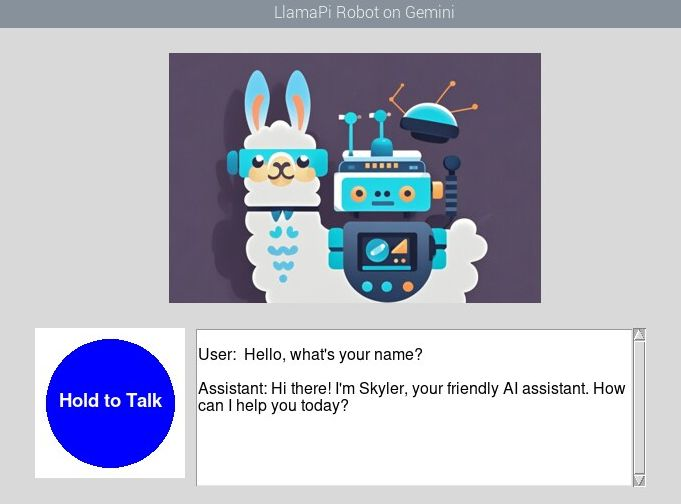

LlamaPi Update - Gemini Support

Ping Zhou, 2024-10-27

LlamaPi now supports Gemini as its backing LLM, in addition to a local LLM and Coze.

On the Gemini side:

- Test the prompt (system instruction) for use with LlamaPi.

- Create an API key (may need to create a project first).

On the LlamaPi side:

- Create a wrapper using the Gemini Python SDK.
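As a rough illustration of what such a wrapper could look like, here is a minimal sketch built on the `google-generativeai` package. The class name `GeminiBackend`, the factory `make_gemini_backend`, the `GEMINI_API_KEY` environment variable and the `gemini-1.5-flash` model name are all assumptions made for this example, not the actual LlamaPi code.

```python
import os


class GeminiBackend:
    """Thin wrapper around a Gemini chat session (hypothetical name)."""

    def __init__(self, chat):
        # `chat` is any object with send_message(text) that returns
        # an object exposing a `.text` attribute.
        self._chat = chat

    def ask(self, user_text: str) -> str:
        # Send one user turn and return the model's reply as plain text.
        response = self._chat.send_message(user_text)
        return response.text.strip()


def make_gemini_backend(system_instruction: str,
                        model_name: str = "gemini-1.5-flash") -> GeminiBackend:
    # Imported lazily so the wrapper itself can be exercised
    # without the SDK installed.
    import google.generativeai as genai

    # Assumption: the API key is supplied through an environment variable.
    genai.configure(api_key=os.environ["GEMINI_API_KEY"])
    model = genai.GenerativeModel(model_name,
                                  system_instruction=system_instruction)
    # A chat session keeps the conversation history between turns.
    return GeminiBackend(model.start_chat())
```

Passing the chat session into the wrapper, rather than creating it inside, keeps the SDK-specific setup in one place and lets the rest of the application depend only on `ask()`.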

To support multiple backing LLMs, I did a major refactor of the LlamaPi code.

- Created a common base class, `LlamaPiBase`, for all three scenarios (local, Coze, Gemini). The base class includes the common functionality such as the UI, audio, ASR, etc.

- Created subclasses for the local LLM, Coze and Gemini respectively. They extend the base class and implement the logic for interacting with the backing LLMs.
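The shape of this refactor can be sketched roughly as follows. Only the name `LlamaPiBase` comes from the project. The method names (`listen`, `show`, `generate`, `run_once`) and the `EchoLlamaPi` subclass are hypothetical stand-ins, and the shared audio/ASR/UI logic is reduced to placeholders.

```python
from abc import ABC, abstractmethod


class LlamaPiBase(ABC):
    """Shared functionality; subclasses only provide the LLM call."""

    def listen(self) -> str:
        # Placeholder for the shared audio capture + ASR step.
        return "hello"

    def show(self, text: str) -> None:
        # Placeholder for the shared UI output step.
        print(text)

    @abstractmethod
    def generate(self, prompt: str) -> str:
        """Ask the backing LLM for a reply (backend-specific)."""

    def run_once(self) -> str:
        # One interaction: listen, ask the backend, show the reply.
        prompt = self.listen()
        reply = self.generate(prompt)
        self.show(reply)
        return reply


class EchoLlamaPi(LlamaPiBase):
    """Stand-in backend; a real subclass would call a local LLM, Coze or Gemini."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"
```

With this layout, supporting another LLM would only require a new subclass that overrides `generate`; calling `EchoLlamaPi().run_once()` walks through the full loop using the stand-in backend.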

With this refactor, it will be easier for me to add support for other LLMs in the future.